ST-F, CEA, TIMA

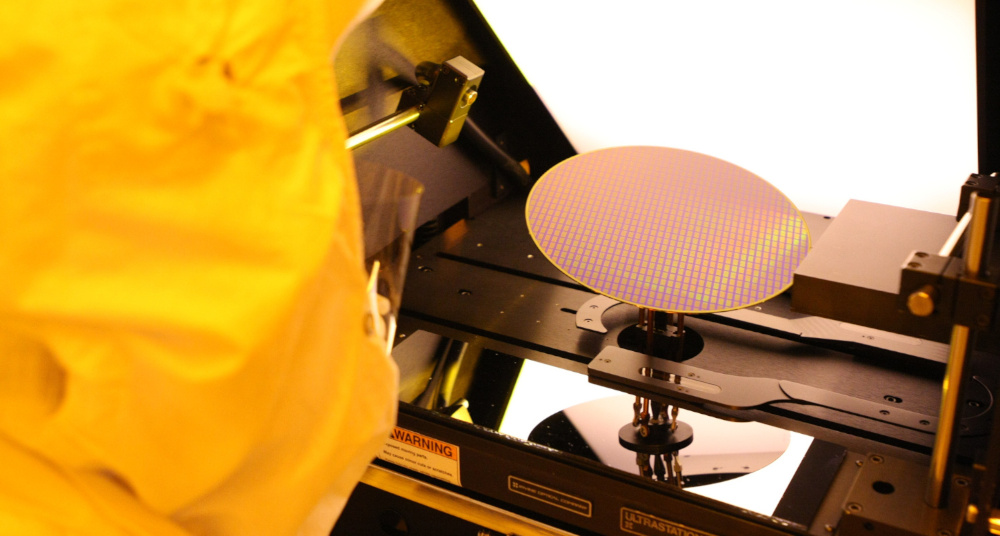

The demonstrator provides a device-integrated solution for the wafer classification problem. The device will acquire images from a camera and analyse them in real time, reporting both the category to which the wafer defect belongs and a binary faulty/non-faulty verdict. Because wafer images are sensitive data and the production line itself cannot be accessed, the setup will consist of a computer displaying wafer data at production rate, with a camera connected to the AI device placed in front of its screen. The outcome of the classification, i.e., the wafer defect category, will be displayed on a monitor connected to the device.
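As a minimal sketch of the two outputs described above, the snippet below turns a vector of per-class scores into a defect category and a faulty/non-faulty flag. The category names, the `interpret` helper, and the rule that the flag is derived from the category are all assumptions made for illustration; the text does not specify how the demonstrator computes either output.

```python
from typing import Sequence, Tuple

# Hypothetical category list; the demonstrator's real classes are not given.
CATEGORIES = ("none", "center", "edge", "scratch")
NO_DEFECT = "none"


def interpret(scores: Sequence[float]) -> Tuple[str, bool]:
    """Return (category, is_faulty) for one wafer image's class scores."""
    if len(scores) != len(CATEGORIES):
        raise ValueError("expected one score per category")
    # Pick the index of the highest score (first one wins on ties).
    best = max(range(len(scores)), key=scores.__getitem__)
    category = CATEGORIES[best]
    # Assumption: a wafer is faulty whenever a defect category is predicted.
    return category, category != NO_DEFECT
```

With these assumed categories, `interpret([0.1, 0.2, 0.6, 0.1])` yields `("edge", True)`, which is the kind of pair the monitor would display.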

Beyond state-of-the-art developments and impacts

Two main innovations underpin this demonstrator. The first concerns the integration of the hardware accelerator placed on the FPGA with its software counterpart: we rely on a new approach that wraps the register interface of the hardware in a message-based conduit. This allows much of the driver code to be shared without noticeable performance loss while letting the actual IP interface vary. The second concerns the use of highly quantised neural networks. These make on-device acceleration a viable alternative, because implementing the basic neural network operations takes only a few tens of lookup tables. Still, this does not allow large networks to fit into a small FPGA and, in the absence of a better alternative, we must rely on manual hardware/software partitioning of the neural network (NN).
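The idea of wrapping a register interface in a message-based conduit can be illustrated with the following sketch. It is not the project's implementation: the register map, the `Message` format, and the class names are hypothetical, and a software stand-in replaces the FPGA IP so the example runs anywhere. The point it tries to show is that the driver logic talks only to the conduit, so the same driver code could be reused if the underlying IP interface changed.

```python
from dataclasses import dataclass
from typing import Dict, Protocol

# Hypothetical register map; the real IP layout is not given in the text.
REG_INPUT, REG_CTRL, REG_STATUS, REG_RESULT = 0x00, 0x04, 0x08, 0x0C
CTRL_START = 0x1
STATUS_DONE = 0x1


@dataclass(frozen=True)
class Message:
    """One register access expressed as a message."""
    op: str          # "read" or "write"
    addr: int
    value: int = 0


class Conduit(Protocol):
    """Anything that can carry a Message to the hardware and return a reply."""
    def send(self, msg: Message) -> int: ...


class SimulatedAccelerator:
    """Software stand-in for the FPGA IP (assumption, for illustration only)."""

    def __init__(self) -> None:
        self.regs: Dict[int, int] = {}

    def send(self, msg: Message) -> int:
        if msg.op == "read":
            return self.regs.get(msg.addr, 0)
        if msg.op == "write":
            self.regs[msg.addr] = msg.value
            if msg.addr == REG_CTRL and msg.value & CTRL_START:
                # Placeholder "inference": echo the input word modulo 4.
                self.regs[REG_RESULT] = self.regs.get(REG_INPUT, 0) % 4
                self.regs[REG_STATUS] = STATUS_DONE
            return 0
        raise ValueError(f"unknown operation: {msg.op!r}")


class AcceleratorDriver:
    """Driver logic written once against the conduit, not raw registers."""

    def __init__(self, conduit: Conduit, max_polls: int = 1000) -> None:
        self.conduit = conduit
        self.max_polls = max_polls

    def run(self, input_word: int) -> int:
        # Clear status, load the input, then start the accelerator.
        self.conduit.send(Message("write", REG_STATUS, 0))
        self.conduit.send(Message("write", REG_INPUT, input_word))
        self.conduit.send(Message("write", REG_CTRL, CTRL_START))
        # Poll a bounded number of times for completion.
        for _ in range(self.max_polls):
            if self.conduit.send(Message("read", REG_STATUS)) & STATUS_DONE:
                return self.conduit.send(Message("read", REG_RESULT))
        raise TimeoutError("accelerator did not signal completion")
```

In this sketch, `AcceleratorDriver(SimulatedAccelerator()).run(10)` returns `2`. Swapping `SimulatedAccelerator` for a class whose `send` performs memory-mapped accesses to a real IP would leave `AcceleratorDriver` unchanged, which is the kind of driver sharing the paragraph above refers to.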